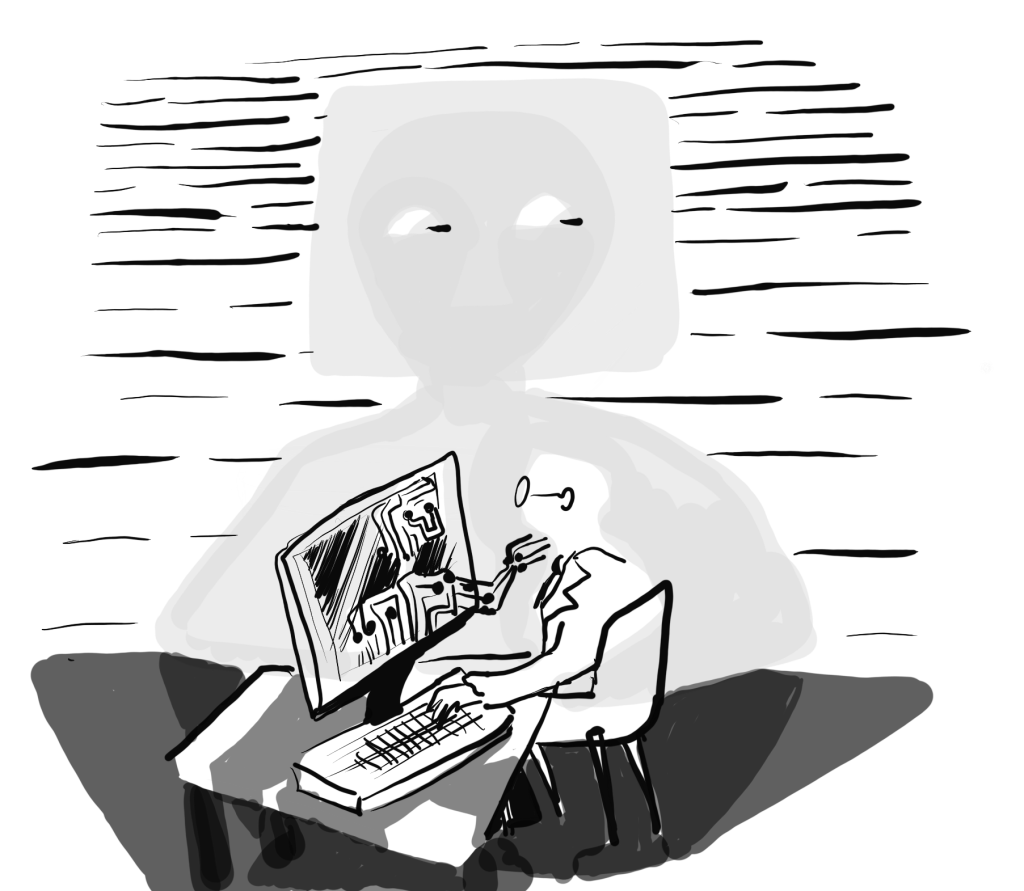

Illustration by Carcazan

The AI race to human intelligence

La(S)imo: So, before our little holiday break, we left off at the underlying question of the direction of AI development: emulate or simulate human intelligence?

(C)arcazan: Yes…

S: Which somehow echoes the yearning for an infinite intelligence in which we mirror ourselves, but also the dangerous anthropomorphising of AI engines. Clearly, the answer is not to put everything on hold — i.e. until regulations are in place, or until a collective ethics is agreed upon.

C: Not putting things on hold until then?

S: …More like in Coelho’s ‘The Alchemist’: continuing the journey while keeping both goals in view, pursuing progress with an active eye on the collective good.

C: But then that risks creating some “beasts” without the ethical input or agreed parameters, including impact on others’ rights, whilst creating the abiding versions… Either AI starts on a level playing field or there’s little point in regulation. It would be like allowing LimeWire to have continued WHILST permitting the regulated sharing sites to operate…

S: There is no easy way to achieve any balance by forbidding research in the area. Of course, once regulations are introduced, existing tools should be aligned. The question remains, though: the more complex neural networks grow, the more AI becomes a black box of which we can only assess the outcome, without knowing exactly how it got there. How do we ensure that the user retains their critical thinking? This is, of course, assuming that the research continues to focus on the current approach, which is investing very little in AI alignment.

C: I think research is one thing, and obviously to conduct research you should be able to use or test the thing you’re researching — however, I feel this is quite distinct from releasing it for full public use as a form of research, when the potential and actual hazards or rights infringements are already being challenged and raised… The question of how users retain their critical thinking is key to the kind of regulation and ethical frameworks around AI which have just not yet been developed — that’s where users will know what’s OK and what is not as an absolute basic starting point.

S: The problem is that the big research is in the hands of private corporations: the most powerful AI engines are developed by those who seek to profit from them. Academia is lagging behind. So the decision to release is of course made by the very same people who invest in it. With regard to regulation, well, it will not by itself restore or nurture critical thinking. Along with adequate education, it might.

C: Regulation can and should include the need for education for everyone on this, whether in schools or as part of using AI in certain fields (that could even be via a user agreement when uploading or opening it for the first time…). However, once people are aware that there is something out there which basically says a product has to abide by certain standards, or can’t just run free, then the subconscious considers that an ethical process around being a user actually exists, and so appreciates that it is not as innocuous as just trying to make pretty pictures or whatever… I agree it’s impossible to make all users think critically about it, but say a user agreement flashed up before you could even access AI, in certain fields in particular… Though that would need the buy-in of the producers as well…

S: There is an unavoidable time gap between the introduction of a regulation and its successful implementation and the achievement of its goals. The education system is already suffering from a lack of ICT expertise among teachers aged 45+, as the teachers themselves report, which means that we also need interim pragmatism. As for user agreements, I am so tired of them! I used to read them all, and subscribed to nothing before reading, but now… it would be a full-time job! User agreements of popular services seem to have become a disclaimer for the dirty stuff, and there is no user leverage either way, not in small numbers, at least.

C: It’s often the case that effective laws follow what happens because something happened. And this happened… So while there is a lot of effort from many forces, it is also important to get it right… At least companies can say the user agreements are there. Did you see that the so-called “Godfather of AI” has his own concerns?

S: Yes, alarming, isn’t it? A painful confirmation that something is going wrong. So other than regulations and petitions, what do we have? Of course, itching each other is another way 🙂

C: Yes, and raising awareness…

S: Still, big numbers are vulnerable: the system operator, or the public officer implementing the measures output by an AI that calculated the risk of recidivism, for instance. These are the recipients of a complex decision whose correctness they are not equipped to assess or challenge. AI redefines the challenges of testing, because it is literally impossible to test all scenarios of a system whose next outcome always depends on its history.

C: That’s true. The cat is out of the bag, so the question is how do we manage and train it? Then again, it was designed to be out of the bag; the quandary is having as quick an answer as we can for it while it mutates every day. So, like human laws, you can only ever legislate for what you know already and think is likely. The human brain will always come up with something unforeseeable and we react; that’s human nature and it will be the same for AI… Perhaps the real issue is that people see AI as a human robot instead of realising that true responsibility lies with the data-set selection and the inputters…

S: Educating people to see an AI engine as what it is, artificial, would indeed be step one. What if, for our next appointment, we look for ways forward: what has been done (methods, initiatives) and what could be done? Frankenstein ideas, mosaics and fabrics made from apparently unrelated threads?

C: I love this idea!!! Suggesting beyond criticism! Awesome! Constructive! Deal!