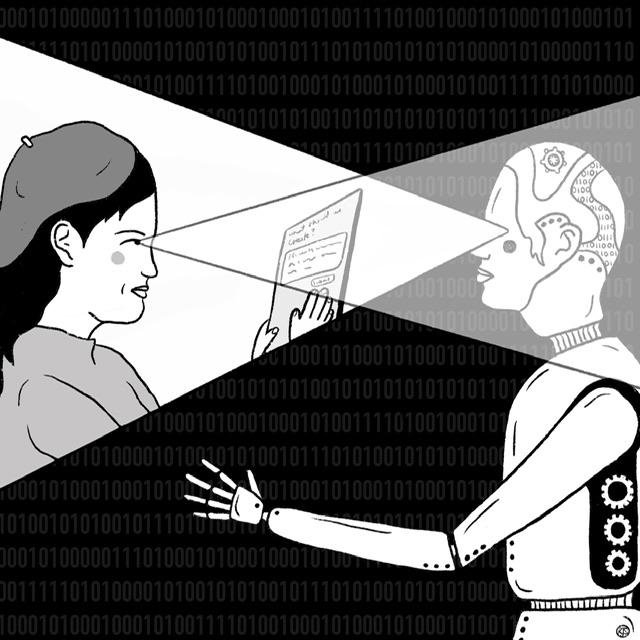

Illustration by Kellie Simms

The Site of Meaning in AI Production

(C)arcazan: So, LaSimo, what exactly do you mean by the site of meaning in AI production?

La(S)imo: Ahh, glad you asked. I see the site of meaning as the invisible place where meaning is born, or made. For an author, meaning is rooted in your intentions, in the memories and feelings associated with your artifact. And then, as a viewer or a reader, you have your own chance to produce meaning too: as Pullman puts it, “the democratic nature of reading”. But if (as we agreed) AI never had a real intention for meaning, is the viewer/reader the sole king of meaning? No dialogue? No questioning?

C: Well, AI has a meaning, but as a result, not as a catalyst: if intention is rooted in the maker, then to me the maker in an AI context is the one who creates and programmes AI to generate when prompted… For me, meaning and purpose are linked: meaning is the what, whereas purpose is the why.

S: Saying that the creator of the algorithm is the owner of AI production is like saying that your parents are the owners of yours. I am not so sure I agree… And meaning is never the result, anyway, not unless you consider the visible dimension of the artifact as synonymous with its meaning, which we should not…

C: We did discuss that AI can only respond and has no soul — that doesn’t sound like an autonomous child of parents, whatever that child may be taught. The what and purpose of AI may be discernable from its stated aims, actual context, its functions and its effects.

S: Fair. Though the main food of AI is data. The algorithm decides what the rules are: statistics, ethics, and all. The thing is, the big absentee in the meaning-making is the Audience. If you do not consider the recipient of your work (which I am sure we agree AI does not), then what you are doing is not Art, it is a personal journal. Which of course in the case of AI makes no sense, unless we romanticise AI by thinking of it as a truly independent actor. That is hardly a valid argument, since the algorithm evolves through its owner’s roadmap and in turn, through the software creator. Assuming that AI use won’t stop, how do we invite a collective, new way of meaning-making, as opposed to risking an individualist drift?

C: Working backwards, isn’t ‘individualist drift’ something that one expects from human artists? The risk of AI art is that it homogenises expression, suppresses individualism and quietly redefines cultural identity and development under the guise of accessibility. I don’t agree that the algorithm decides what the rules and ethics are; it only decides how to apply what it is programmed with. For example, look at the Foxglove work to expose the UK Home Office’s algorithm, which flagged certain countries as being higher risk than others, which in turn led to many visa applications being refused, apparently incorrectly. They decided which were the ‘risk’ countries and the algorithm applied that to incoming applications. That’s why who makes the rules is definitive as to intention and then purpose.

S: By individualist drift, I meant the risk that each individual reader/viewer would make their own meaning, in isolation, without an option to appeal, since (precisely as you say) there is no individual (not in human terms, at least) behind the AI author of the work. With regard to your point about the labelling of high-risk countries, this is precisely the risk of using statistical data on the assumption that the data is objectively true. In other words, there is nothing inherently evil in taking two sets of flowers picked from one field and saying that there is a better chance of finding the type that is (in your bouquets) more numerous. But how was the selection made? We must consider the position of the field, the preferences of the flower picker, the season, the latitude, and so on. So, as you see, the detonation happens not in the data alone, not in the algorithm alone, but in their dangerous union.

C: And that’s why, with the Metaverse or its non-Zuckerberg equivalents, I always have to question why they want to recreate the world instead of investing in or working towards fixing the one we’re actually in. I read an article in The Economist saying that investors are apparently falling over themselves to invest in AI because they basically just have too much money, and I ask, why? When you look at who is in this rush, it’s basically the Silicon Valley boys vying for bigger toys. Why don’t they invest in renewable energy, or better sustainability infrastructure? Or hospitals? Or developing countries in terms of food, aid or clean water? Or cleaning the seas? There’s plenty to invest in beyond ways of rendering millions technologically bound and then dependent on their products, some of which only benefit the maker.

S: Yeah, well, we know the answer to that. More potential in relation to their own priorities, in which money plays no secondary role. And I say this fully aware that there is also a lot of good coming from AI (health and medicine, for instance). So, if we stay pragmatic, and we should, where do we go from here? We are not on the side of creators: we are users, receivers (at best, active receivers) of AI development. So what shall our role as authors, educators, thinkers be?

C: Yes, and I don’t say a word against the AI which actually benefits people, as in those contexts you mention. That is quite separate but should still be monitored for effectiveness and benefit. I just think that our role is to evaluate whether something that appears innocuous or useful actually is so in the context of how we advance as a people made of true individuals. For example, many believe AI in art is just another tool and we should chill out and get used to it. I say a tool is something which benefits the development of the user, which I don’t believe AI art does. So I can’t call it a tool as I would a digital pen or some drawing software.

C: I also say that technological development is occurring in an era where personal data and information are the new currencies which give true power: the data economy, if you like. And they who hold the key to how data and information are made available hold the power. For instance, he who shall not be named allegedly wants to invent a ChatGPT which is ‘less woke’, and people will rely on the ‘answers’ it gives as what counts as the truth or ‘information’.

S: What you would or would not call a tool, I am afraid, crosses the line into personal positions. I mean, is developing a digital pen more urgent than seeking solutions to some of the more pressing topics that you listed? Resources are unevenly and unfairly distributed among decision makers (democracy in any self-labelled “democratic country” does not work very well, I am sure we agree on that). Indeed, the use of data, the availability of data, should be better controlled and monitored. But we all got so used to free services (WhatsApp, Gmail, Signal, to name a few), which somehow amounts to our silent agreement to share the contents of our own communications with those willing to make them travel for free. So, we are the first to be complicit in this system.

C: We’re only complicit if we know what really happens to the data but either don’t care or consent. And then, is consent complicity? That’s another debate. AI art is to me not a tool because artists become ‘prompters’ or co-creators: we, and the drawing on our work, become the tools for AI… Khalil Gibran once spoke of ‘life’s longing for itself’. Maybe we should consider whether the debate around AI art is that of a ‘life longing for itself’? And if so, whose life: artists’ or technological development’s?

S: Intrigued… But I definitely need to do some serious thinking before next week!